The 'creepy Facebook AI' story that captivated the media

The newspapers have a scoop today - it seems that artificial intelligence (AI) could be out to get us.

"'Robot intelligence is dangerous': Expert's warning after Facebook AI 'develop their own language'", says the Mirror.

Similar stories have appeared in the Sun, the Independent, the Telegraph and in other online publications.

It sounds like something from a science fiction film - the Sun even included a few pictures of scary-looking androids.

So, is it time to panic and start preparing for an apocalypse at the hands of machines?

Probably not. While some great minds - including Stephen Hawking - are concerned that one day AI could threaten humanity, the Facebook story is nothing to be worried about.

Where did the story come from?

Way back in June, Facebook published a blog post about interesting research on chatbot programs - which have short, text-based conversations with humans or other bots. The story was covered by New Scientist and others at the time.

Facebook had been experimenting with bots that negotiated with each other over the ownership of virtual items.

It was an effort to understand how linguistics played a role in the way such discussions played out for negotiating parties. Crucially, the bots were programmed to experiment with language in order to see how that affected their dominance in the discussion.

A few days later, some coverage picked up on the fact that in a few cases the exchanges had become - at first glance - nonsensical:

Bob: "I can can I I everything else"

Alice: "Balls have zero to me to me to me to me to me to me to me to me to"

Although some reports insinuate that the bots had at this point invented a new language in order to elude their human masters, a better explanation is that the neural networks had simply modified human language for the purposes of more efficient interaction.

As technology news site Gizmodo said: "In their attempts to learn from each other, the bots thus began chatting back and forth in a derived shorthand - but while it might look creepy, that's all it was."

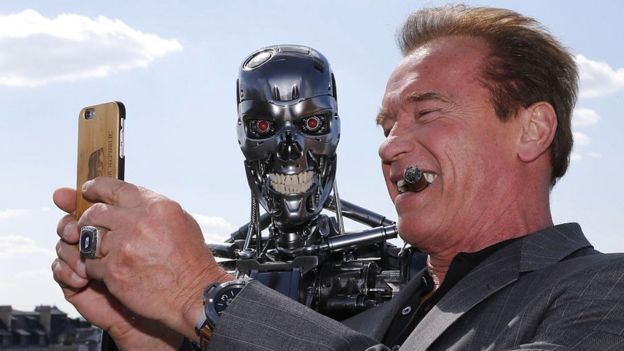

Arnold Schwarzenegger and The Terminator

Unlike in the movies, humans and machines aren't trying to kill each other.

AIs that rework English as we know it in order to better compute a task are not new.

Google reported that its translation software had done this during development. "The network must be encoding something about the semantics of the sentence," Google said in a blog post.

And recently, Wired reported on a researcher at OpenAI who is working on a system in which AIs invent their own language, improving their ability to process information quickly and therefore tackle difficult problems more effectively.

The story seems to have had a second wind in recent days, perhaps because of a verbal scrap over the potential dangers of AI between Facebook CEO Mark Zuckerberg and technology entrepreneur Elon Musk.

Robo-fear

But the way the story has been reported says more about cultural fears and representations of machines than it does about the facts of this particular case.

Plus, let's face it, robots just make for great villains on the big screen.

In the real world, though, AI is a huge area of research at the moment and the systems currently being designed and tested are increasingly complicated.

One result of this is that it's often unclear how neural networks come to produce the output that they do - especially when two are set up to interact with each other without much human intervention, as in the Facebook experiment.

That's why some argue that putting AI in systems such as autonomous weapons is dangerous.

It's also why ethics for AI is a rapidly developing field - the technology will surely be touching our lives ever more directly in the future.

Chatbots

Most chatbots are designed to carry out a very limited set of functions - and are therefore fairly boring.

But Facebook's system was being used for research, not public-facing applications, and it was shut down because it was doing something the team wasn't interested in studying - not because they thought they had stumbled on an existential threat to mankind.

It's important to remember, too, that chatbots in general are very difficult to develop.

In fact, Facebook recently decided to limit the rollout of its Messenger chatbot platform after it found many of the bots on it were unable to address 70% of users' queries.

Chatbots can, of course, be programmed to seem very humanlike and may even dupe us in certain situations - but it's quite a stretch to think they are also capable of plotting a rebellion.

At least, the ones at Facebook certainly aren't.

Source BBC